
There's a new drug on the market that is either A) the greatest revolution in women's sexual health since oral contraceptives or B) a shining example of the FDA's ineptitude when it comes to drug approval.

If you've watched TV, listened to the radio, or, basically, been awake at all for the past week, you've heard of the FDA-approved drug flibanserin, marketed as Addyi.

Like all things that have to do with sex in the United States, Addyi's approval has been very controversial. Emotions run high on both sides of the issue, but few stories address the data that led to approval in the first place. In this in-depth look, we'll examine the numbers and arm clinicians with the information they need when patients arrive asking for the little pink pill.

Let's get one thing out of the way quickly. This is not female Viagra. It is not an as-needed drug. It is actually a complex serotonin receptor ligand that is taken daily. In fact, it started off life as an antidepressant.

Studies of its antidepressant effects were not compelling enough for Boehringer Ingelheim, the drug's developer, to move forward.

But there was an interesting side effect. Some women in these studies described increased sexual desire and more frequent sexual activity. Sensing a potential goldmine, BI ran several randomized controlled trials to demonstrate the drug's efficacy and safety. These trials all have somewhat unfortunate acronyms: DAISY, VIOLET, and BEGONIA.

These trials enrolled a total of around 2400 pre-menopausal women with hypoactive sexual desire disorder, or HSDD. They all had to be in monogamous and, for some reason, heterosexual relationships. HSDD is characterized by low sexual desire that is disturbing to the woman and that cannot be explained by factors like medications, psychiatric illness, problems in the relationship, lack of sleep, etc. The HSDD also had to be acquired: there must have been a prior period of normal sexual functioning.

BI needed to show the FDA two things to prove the drug works: first, that the drug increased desire, and second, that the drug actually increased sexual activity.

The FDA requires two randomized trials for drug approval. DAISY and VIOLET were supposed to be those trials, but there was a problem. Flibanserin significantly increased the number of sexually satisfying events.

But it did not increase a daily desire score as captured by an e-diary entry. Having failed to meet both endpoints, the drug was denied approval by the FDA in 2010. Boehringer Ingelheim then sold the drug to the small startup Sprout Pharmaceuticals.

One of the secondary desire metrics, however, was positive in these trials, so the BEGONIA trial used sexually satisfying events and the Female Sexual Function Index desire score as its co-primary endpoints. The desire score here comprised two questions; take a look:

In the BEGONIA trial, both of these outcomes favored flibanserin over placebo:

You can see in the charts that you get about half a point of improvement in desire over placebo, and maybe one additional sexually satisfying event per month compared to placebo.

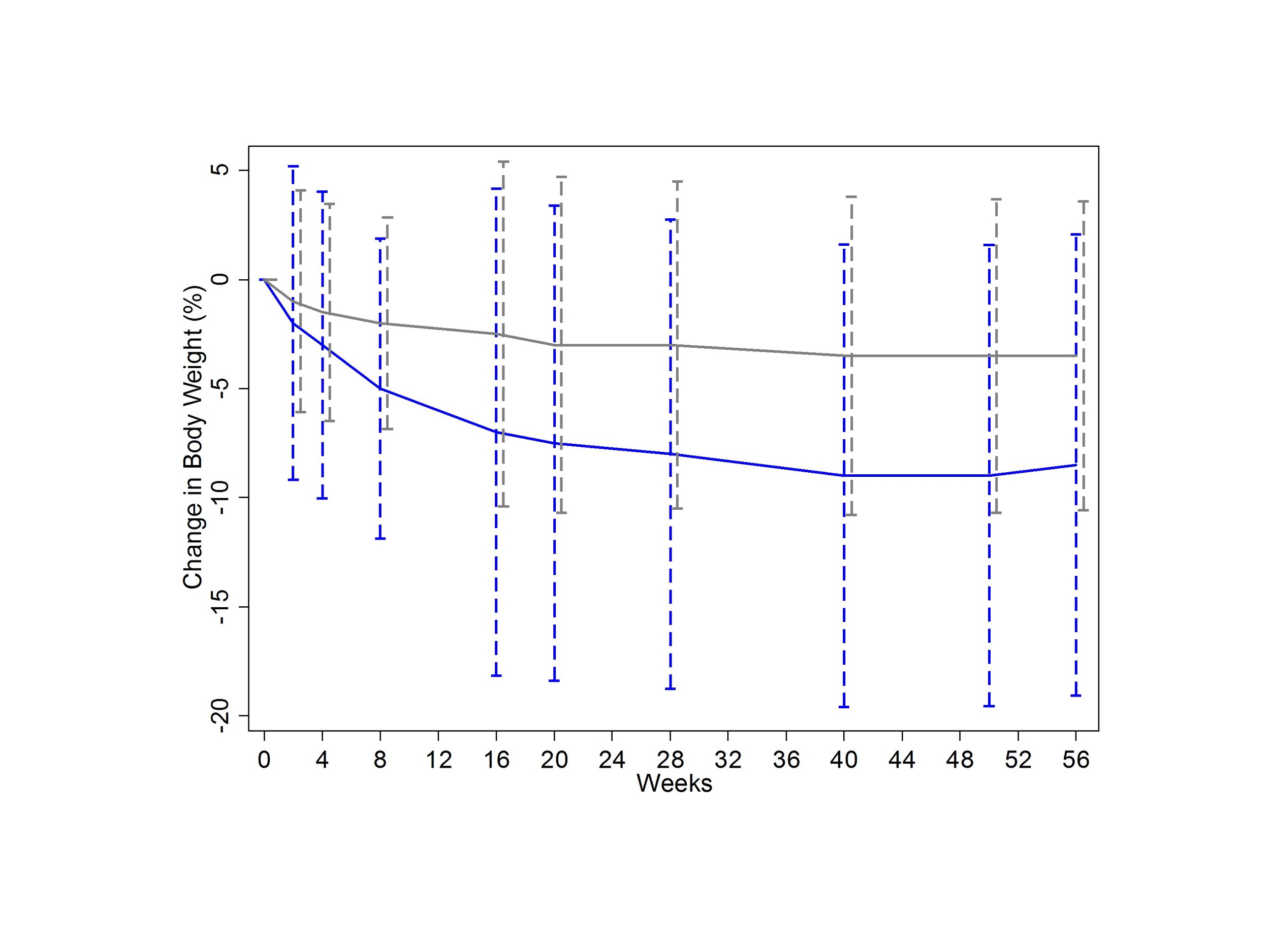

But the average performance of a drug often doesn't give you a sense of what the range was like. I was able to reconstruct the distribution of change in sexually satisfying events using some data from one of the trials:

Here, red is placebo and green is flibanserin. The important thing to note is that, though the average flibanserin-taker gained 2.5 sexually satisfying events (SSEs) per month, the range was quite variable.

Now armed with a positive trial, Sprout again sought FDA approval and was again denied, this time due to concerns over side effects.

Twice as many women randomized to flibanserin stopped the drug due to adverse events as did those taking placebo:

Central nervous system depression (somnolence, fatigue, or sedation) was seen in 21% of the women on flibanserin compared to just 7% of placebo-treated patients.
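To put those two percentages in more clinical terms, here is a rough back-of-the-envelope sketch. It is my own arithmetic on the rounded 21% and 7% figures quoted above, not a number reported by the trials, and the function name is purely illustrative. It converts the gap into an absolute risk increase and an approximate number needed to harm.

```python
def number_needed_to_harm(risk_treated: float, risk_control: float) -> float:
    """Return 1 / (absolute risk increase) for two event proportions.

    Both arguments are proportions between 0 and 1. This is an
    illustrative helper, not something taken from the trial reports.
    """
    absolute_risk_increase = risk_treated - risk_control
    if absolute_risk_increase <= 0:
        raise ValueError("treated risk must exceed control risk")
    return 1.0 / absolute_risk_increase


# Rounded figures quoted in the text (assumed exact for this sketch).
cns_flibanserin = 0.21  # somnolence, fatigue, or sedation on flibanserin
cns_placebo = 0.07      # the same events on placebo

ari = cns_flibanserin - cns_placebo
nnh = number_needed_to_harm(cns_flibanserin, cns_placebo)

print(f"Absolute risk increase: {ari:.0%}")
print(f"Approximate number needed to harm: {nnh:.1f}")
```

In other words, under these rounded inputs, roughly one in every seven women treated would experience a sedation-type side effect she would not have had on placebo.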

Pharmacokinetic studies nicely demonstrated that CYP3A4 inhibitors like fluconazole and grapefruit juice increased the blood levels of flibanserin. These are pretty easily avoided. Oral contraceptives are also mild inhibitors of these enzymes, and it does look like the somnolence side effects of the drug are exacerbated by OCP use.

And then there is alcohol. If there's one thing you hear about this drug, it's "don't mix it with alcohol." What's the data? Well, there's not much.

At the FDA's urging, a small study of 25 individuals (almost all men) was conducted to measure the effect of combining flibanserin with alcohol. As you can see, mixing the two resulted in a synergistic drop in blood pressure. In that study, 5 people (or 20%) had a severe adverse event (mostly severe somnolence).

It's worth noting that flibanserin alone was more likely to cause somnolence than either low- or high-dose alcohol alone.

You hear a lot about fainting in the news coverage of flibanserin. How many women passed out in the phase three trials? 14 out of around 2400. Ten were taking the drug, and four were taking placebo.

Not to be denied, Sprout Pharmaceuticals did two things. First, they did more studies to characterize the drug's safety profile.

For example:

The FDA commissioned a driving study to be sure that women who take this drug at night are safe to drive the next day.

Flibanserin is green here, and what you see is basically that the drug has no effect on cognition or reaction time. Sleepy? Yes. Dangerous? Probably not.

The second thing Sprout did was to start the "Even the Score" campaign. Funded by Sprout Pharmaceuticals, this was a marketing campaign directed squarely at the FDA in order to pressure it into approving the drug.

The idea here was that there were all these drugs for male sexual dysfunction and none for female sexual dysfunction, and that somehow reflected bias at the level of the FDA. Female sexuality is something our society does not handle particularly well, so I get the need for movements like this, but the fact that it was funded by the very people likely to benefit financially from it does feel a bit, well, distasteful.

But shady marketing practices don't mean the drug is bad, any more than the presence of real gender bias in society makes the drug good.

So is the drug good? That's the billion-dollar question. The practical answer is that it's up to the woman taking it. Is one additional sexually satisfying experience a month worth the side effects (which, contrary to the popular media portrayal, seem to be rather mild)? Well, they asked the women in the BEGONIA study how much their HSDD had improved. Here are the results:

All told, about 50% of the women taking flibanserin felt that it benefited them. Just under 40% of the women taking placebo felt that way. This leads to my major prediction for this drug: despite the side effects, it will be popular. In real life, there are no placebo controls. About 50% of women will feel better. And yes, some of that will be due to the placebo effect.
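For a sense of scale, here is a quick sketch of what those response rates imply. It assumes round figures of 50% and 40% (the text says "about" and "just under", so treat the outputs as approximate), and the variable names are illustrative rather than anything taken from the study.

```python
# Rounded self-reported benefit rates from the text (assumptions:
# "about 50%" taken as 0.50, "just under 40%" taken as 0.40).
benefit_flibanserin = 0.50
benefit_placebo = 0.40

# Absolute difference attributable to the drug itself.
absolute_benefit = benefit_flibanserin - benefit_placebo

# Number needed to treat: women treated per one extra responder.
nnt = 1.0 / absolute_benefit

# Share of the perceived benefit on drug that the placebo arm also achieved.
placebo_share = benefit_placebo / benefit_flibanserin

print(f"Absolute benefit over placebo: {absolute_benefit:.0%}")
print(f"Approximate number needed to treat: {nnt:.0f}")
print(f"Share of responders matched by placebo: {placebo_share:.0%}")
```

Under these assumptions, about ten women would need to take the drug for one of them to report a benefit she would not have reported on placebo, and roughly four out of five women who feel better on flibanserin would likely have felt better on a sugar pill.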

The real problem with these studies, though, is not that flibanserin is a risky drug. The problem is that the control group got placebo. My question isn't whether the drug works better than placebo; my question is whether it works better than sex therapy and/or couples therapy. If that study ever gets done, it probably won't be run by Sprout Pharmaceuticals.

*Thanks to PhDecay (follow her on Twitter here) for her advice with this article.